Running Components Outside Docker

When profiling or looking for memory leaks, it can be helpful to run some of the DCS components outside of Docker.

The easiest way to do this is to keep running the third-party components, such as Redis and Dapr, in Docker, and to run only the components you wish to profile or analyze for memory leaks directly from Visual Studio.

Below is an example of how this can be done for the backend component, GlobalDcc.Backend.Server.

Step-by-step guide

- Configure the backend to run:

  - Copy all the environment variables for the backend service defined in docker-compose.yml to the launchSettings.json in the GlobalDcc.Backend.Server project. Below is an example where I've inserted the arguments from the .env file and changed the assets path to a different local path. Notice also that DaprService is set to localhost.

        {
          "profiles": {
            "GlobalDcc.Backend.Server": {
              "commandName": "Project",
              "environmentVariables": {
                "ASPNETCORE_ENVIRONMENT": "Development",
                "DAPR_HTTP_PORT": "3600",
                "DAPR_GRPC_PORT": "51000",
                "DaprService": "localhost",
                "GlobalDccBackendConfig__Common__Cache__Dcc__ConnectionString": "localhost:6379",
                "GlobalDccBackendConfig__Common__Cache__Dcc__CacheType": "Redis",
                "GlobalDccBackendConfig__Common__Cache__StorageComponent": "state",
                "GlobalDccBackendConfig__Common__Dapr__PubSub": "pubsub",
                "GlobalDccBackendConfig__Common__Dapr__PubSubQueue": "pubsub-queue",
                "GlobalDccBackendConfig__Common__Dapr__State": "state",
                "GlobalDccBackendConfig__RoadNetId": "DK",
                "GlobalDccBackendConfig__DataFolderPath": "D:\\gdcc_assets\\UK",
                "Kestrel__Endpoints__Http__Url": "http://0.0.0.0:5022"
              },
              "nativeDebugging": true
            },
            "Docker Backend": {
              "commandName": "Docker",
              "DockerfileRunArguments": "--name dcc-backend"
            }
          }
        }

  - Comment out the configuration of the backend service from docker-compose.yml.

Enable the relevant components in the docker component to communicate with the backend. In this case that's just

backend-dapr-

In docker-compose.yml expose its http and grpc port:

backend-dapr:

<<: *dapr-service

ports:

- $DaprHttpPort:$DaprHttpPort

- $DaprGrpcPort:$DaprGrpcPort -

Change any references to the

backendnetwork tohost.docker.internal- At the time of writing this was just the argument for

"

--app-channel-address" - You will probably also need to poke a hole in your firewall, to allow

docker to talk to the backend server. For me that required two steps:

-

With powershell running as admin enable tcp traffic to the backend's port, 5022:

New-NetFirewallRule -DisplayName "Allow Port 5022" -Direction Inbound -Protocol TCP -LocalPort 5022 -Action Allow -

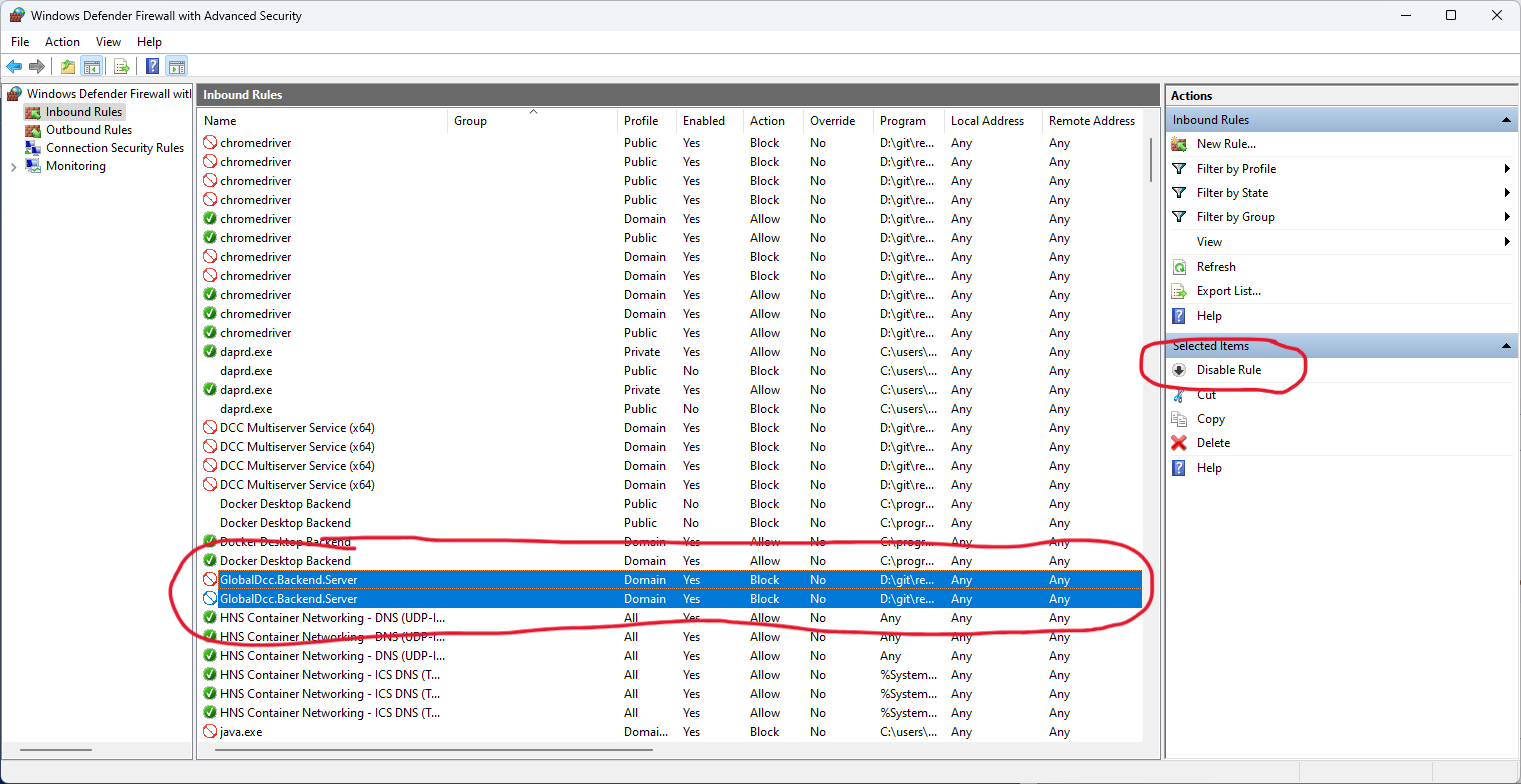

With powershell running as admin, open windows firewall with advanced settings, and disable any rules explicilty blocking the backend server's traffic.

wf.msc- Look for rules like in the image below and just disable them:

- Look for rules like in the image below and just disable them:

-

- At the time of writing this was just the argument for

"

-

If you are using other components, such as redis or sqlserver, remember to expose their ports as well.

-

-

- Start the Docker components by right-clicking the docker-compose project and selecting Compose Up.

- Start the backend server (it will throw a lot of exceptions if it can't connect to the Dapr container).

- Test that the Dapr components can communicate with the backend:

  - In Docker Desktop, click on the "backend-dapr-1" container.

  - Select the "Exec" tab.

  - Run the following command and check that it doesn't time out:

        curl http://host.docker.internal:5022/health/live

  - If it times out, it's probably some firewall rule that's still blocking traffic.
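
If the backend is still starting up, a single curl can fail even when the firewall is fine. Below is a minimal sketch of a retry loop around the same health check, meant to be pasted into the container's Exec tab. The URL and port are the ones used in this guide; the function name wait_for_backend, the retry count, the delay and the 5-second per-attempt timeout are my own arbitrary choices, and it assumes the container provides a POSIX sh alongside curl.

```shell
# Sketch only: wait_for_backend is a made-up helper name, not part of the project.
# Polls the backend's liveness endpoint until it answers or the attempts run out.
wait_for_backend() {
    url="${1:-http://host.docker.internal:5022/health/live}"  # endpoint from this guide
    attempts="${2:-5}"                                        # assumed retry count
    delay="${3:-2}"                                           # assumed pause in seconds
    i=1
    while [ "$i" -le "$attempts" ]; do
        # -f: treat HTTP errors as failures, -s: no progress output,
        # --max-time 5: give up on a single attempt after 5 seconds
        if curl -fs --max-time 5 -o /dev/null "$url"; then
            echo "backend reachable after $i attempt(s)"
            return 0
        fi
        echo "attempt $i/$attempts failed" >&2
        i=$((i + 1))
        sleep "$delay"
    done
    echo "backend not reachable; check the firewall rules" >&2
    return 1
}
```

Calling wait_for_backend with no arguments uses the defaults above. If every attempt fails, the firewall rules from the earlier step are the first thing to recheck.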

With these steps you'll be able to use Visual Studio's profiling tools for both heap analysis and CPU profiling.